Example Notebook showcasing the different segmentation workflows

This notebook walks you through the different segmentation workflows currently implemented in SPARCSpy using the same input example. Each segmentation workflow needs to be implemented in a seperate SPARCSpy project as the segmentation mask is the starting point for all further downstream steps.

[1]:

import os

import pandas as pd

import matplotlib.pyplot as plt

import h5py

import numpy as np

import tifffile

from sparcscore.pipeline.project import Project

from sparcscore.pipeline.extraction import HDF5CellExtraction

[2]:

#define input images

project_directory = "../../../example_data/example_3/segmentation_workflows"

images = ["../../../example_data/example_3/input_images/Ch1.tif",

"../../../example_data/example_3/input_images/Ch2.tif",

"../../../example_data/example_3/input_images/Ch3.tif"]

[3]:

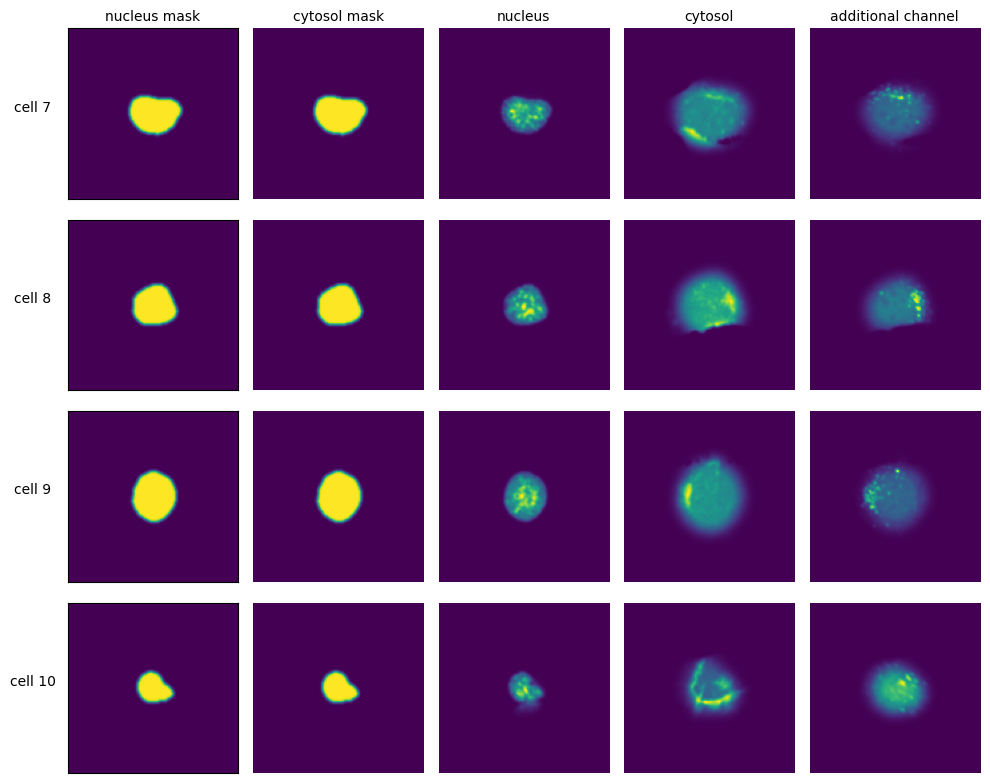

# visualize input images as example

# it is not recommended to execute this block with large input images as it will compute for some time

#read input image to visualize as an example

image = np.array([tifffile.imread(img) for img in images])

def colorize(im, color, clip_percentile=0.0):

"""

Helper function to create an RGB image from a single-channel image using a

specific color.

"""

# Check that we do just have a 2D image

if im.ndim > 2 and im.shape[2] != 1:

raise ValueError('This function expects a single-channel image!')

# Rescale the image according to how we want to display it

im_scaled = im.astype(np.float32) - np.percentile(im, clip_percentile)

im_scaled = im_scaled / np.percentile(im_scaled, 100 - clip_percentile)

im_scaled = np.clip(im_scaled, 0, 1)

# Need to make sure we have a channels dimension for the multiplication to work

im_scaled = np.atleast_3d(im_scaled)

# Reshape the color (here, we assume channels last)

color = np.asarray(color).reshape((1, 1, -1))

return im_scaled * color

def generate_composite(images, colors = [(0, 0, 1),(0, 1, 0), (1, 0, 0), (1, 0, 1)], plot = False):

colorized = []

for image, color in zip(images, colors):

image = colorize(image, color, 0.0)

colorized.append(image)

if plot:

for i in colorized:

plt.figure()

plt.imshow(i)

image = colorized[0]

for i in range(len(colorized)-1):

image += colorized[i+1]

return(np.clip(image, 0, 1))

fig, axs = plt.subplots(1, 4, figsize = (20, 5));

for i, _img in enumerate(image):

axs[i].imshow(_img, cmap='gray')

axs[i].axis("off")

axs[i].set_title("Channel {}".format(i+1))

#also plot and visualize input image

img = generate_composite(image)

axs[3].imshow(img)

axs[3].axis("off");

axs[3].set_title("Composite image");

fig.tight_layout()

WGA Segmentation

This segmentation workflow aims to segment mononucleated cells. Based on a nuclear and cytosolic stain, it first uses a thresholding approach to identify nuclei which are assumed to be the center of each cell. Then in a second step, the center of the identified nuclei are used as a starting point to generate a potential map using the cytosolic stain. This potential map is then used to segment the cytosol using a watershed approach. At the end of the workflow the user obtains both a nuclear and a

cytosolic segmentation mask where each cytosol is matched to exactly one nucleus as kann be identified by the matching cell id.

The configuration file for the WGASegmentation method contains many parameters that need to be optimized for your particular dataset. Here is an example configuration:

WGASegmentation:

input_channels: 3

chunk_size: 50 # chunk size for chunked HDF5 storage. is needed for correct caching and high performance reading. should be left at 50.

lower_quantile_normalization: 0.001

upper_quantile_normalization: 0.999

median_filter_size: 4 # Size in pixels

nucleus_segmentation:

lower_quantile_normalization: 0.01 # quantile normalization of dapi channel before local tresholding. Strong normalization (0.05,0.95) can help with nuclear speckles.

upper_quantile_normalization: 0.99 # quantile normalization of dapi channel before local tresholding. Strong normalization (0.05,0.95) can help with nuclear speckles.

median_block: 41 # Size of pixel disk used for median, should be uneven

median_step: 4

threshold: 0.2 # threshold above local median for nuclear segmentation

min_distance: 8 # minimum distance between two nucleis in pixel

peak_footprint: 7 #

speckle_kernel: 9 # Erosion followed by Dilation to remove speckels, size in pixels, should be uneven

dilation: 0 # final dilation of pixel mask

min_size: 200 # minimum nucleus area in pixel

max_size: 5000 # maximum nucleus area in pixel

contact_filter: 0.5 # minimum nucleus contact with background

wga_segmentation:

threshold: 0.05 # treshold above which cytosol is detected

lower_quantile_normalization: 0.01

upper_quantile_normalization: 0.99

erosion: 2 # erosion and dilation are used for speckle removal and shrinking / dilation

dilation: 7 # for no change in size choose erosion = dilation, for larger cells increase the mask erosion

min_clip: 0

max_clip: 0.2

min_size: 200

max_size: 30000

chunk_size: 50

[4]:

from sparcscore.pipeline.workflows import WGASegmentation

project_location = f"{project_directory}/WGASegmentation"

project = Project(os.path.abspath(project_location),

config_path= "../../../example_data/example_3/config_example3.yml",

overwrite=False,

debug=False,

segmentation_f=WGASegmentation,

extraction_f=HDF5CellExtraction,

)

project.load_input_from_file(images)

modifying config

(3, 1079, 1079)

/Users/sophia/Documents/GitHub/SPARCSpy/src/sparcscore/pipeline/project.py:108: UserWarning: Theres already a directory in the location path

warnings.warn("Theres already a directory in the location path")

[5]:

project.segment()

project.extract()

/Users/sophia/Documents/GitHub/SPARCSpy/src/sparcscore/pipeline/workflows.py:192: RuntimeWarning: invalid value encountered in cast

px_center = np.round(center_nuclei).astype(int)

/Users/sophia/Documents/GitHub/SPARCSpy/src/sparcscore/pipeline/workflows.py:214: RuntimeWarning: invalid value encountered in cast

px_center = np.round(center_nuclei).astype(int)

WARNING:root:Temp mmap arrays are written to /var/folders/35/p4c58_4n3bb0bxnzgns1t7kh0000gn/T/temp_mmap__fnlbjyy. Cleanup of this folder is OS dependant, and might need to be triggered manually!

WARNING:root:Folder /var/folders/35/p4c58_4n3bb0bxnzgns1t7kh0000gn/T/temp_mmap__fnlbjyy with temp mmap arrays is being deleted.All existing temp mmapp arrays will be unusable!

WARNING:root:New temp folder location. Temp mmap arrays are written to /var/folders/35/p4c58_4n3bb0bxnzgns1t7kh0000gn/T/./temp_mmapci8bz75y. Cleanup of this folder is OS dependant, and might need to be triggered manually!

extracting classes: 113it [00:00, 192.50it/s]

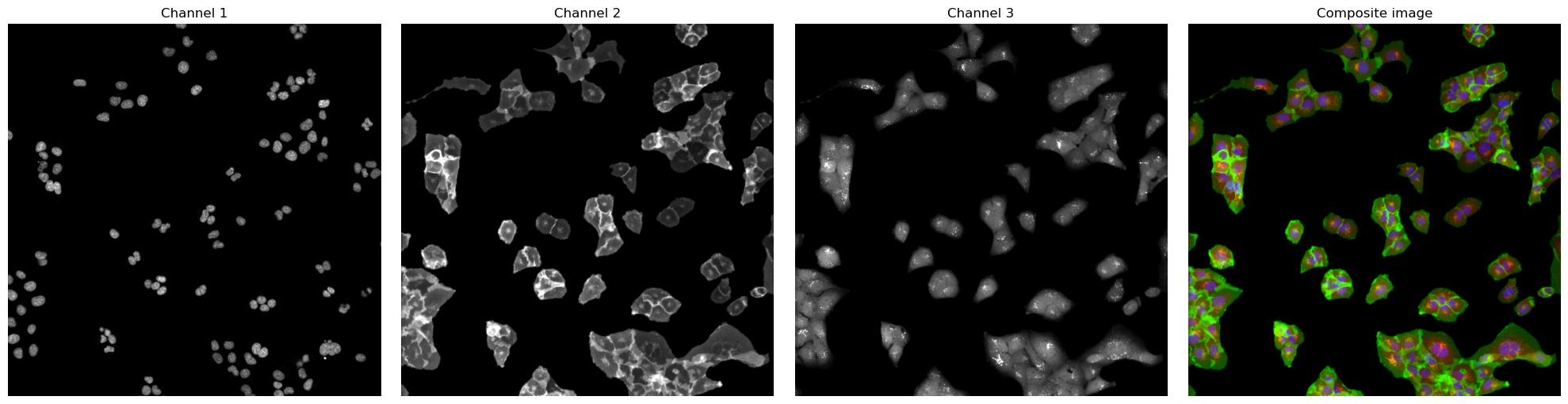

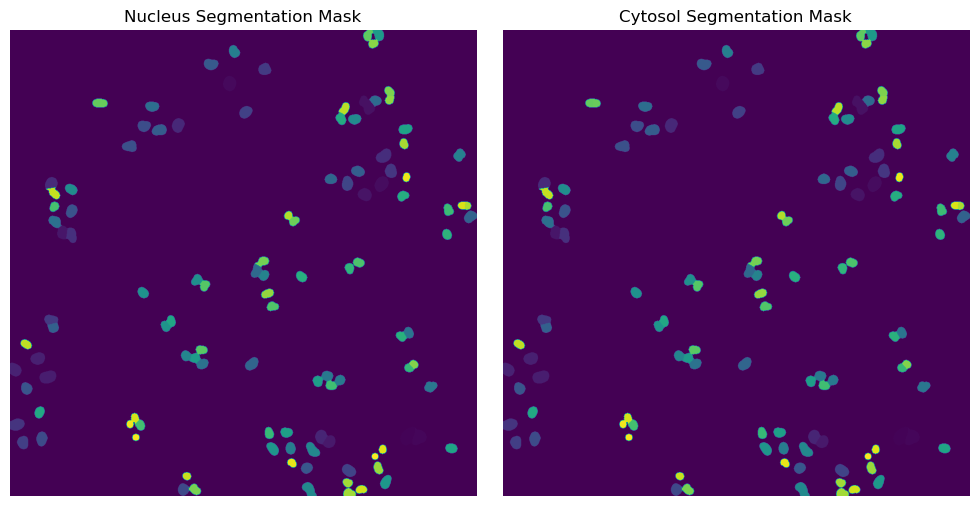

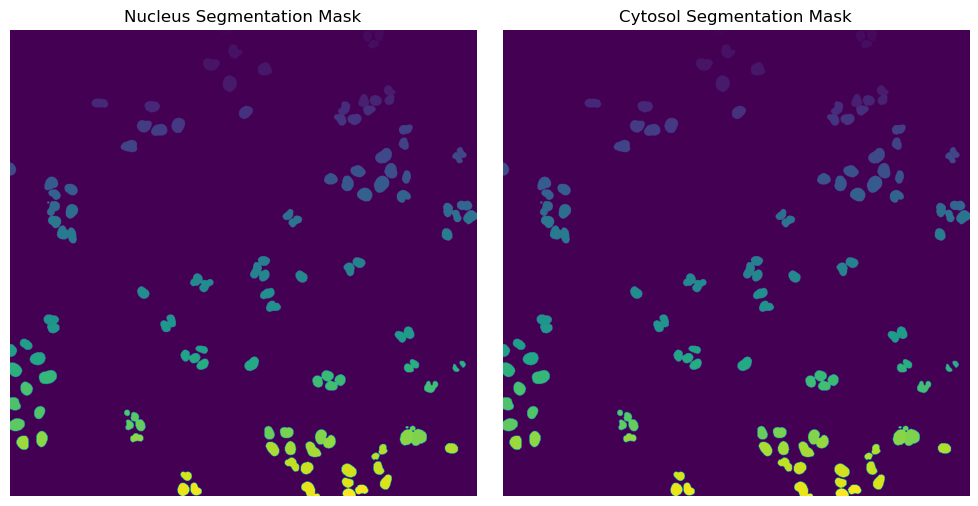

Segmentation Results

[6]:

with h5py.File(f"{project_location}/segmentation/segmentation.h5") as hf:

segmentation = hf.get("labels")

fig, axs = plt.subplots(1, 2, figsize = (10, 5));

axs[0].imshow(segmentation[0])

axs[0].axis("off")

axs[0].set_title("Nucleus Segmentation Mask")

axs[1].imshow(segmentation[1])

axs[1].axis("off")

axs[1].set_title("Cytosol Segmentation Mask")

fig.tight_layout()

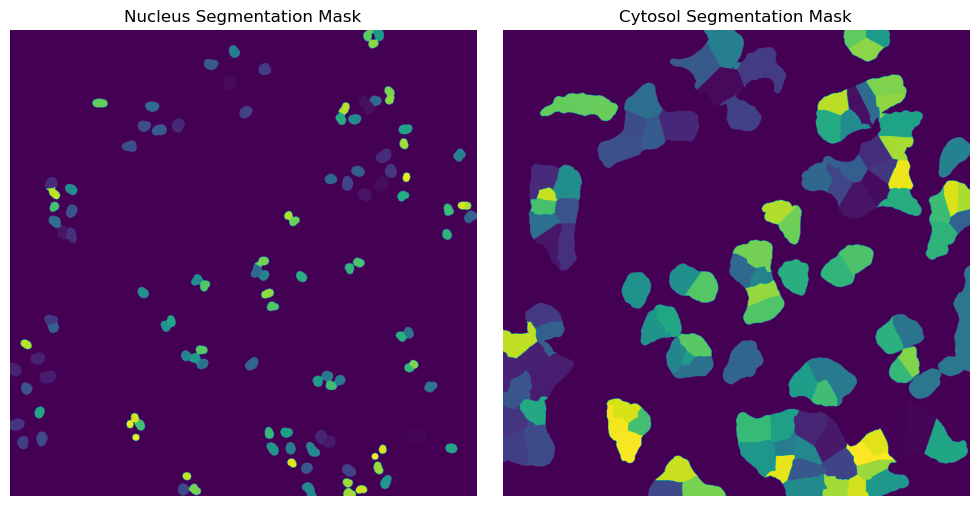

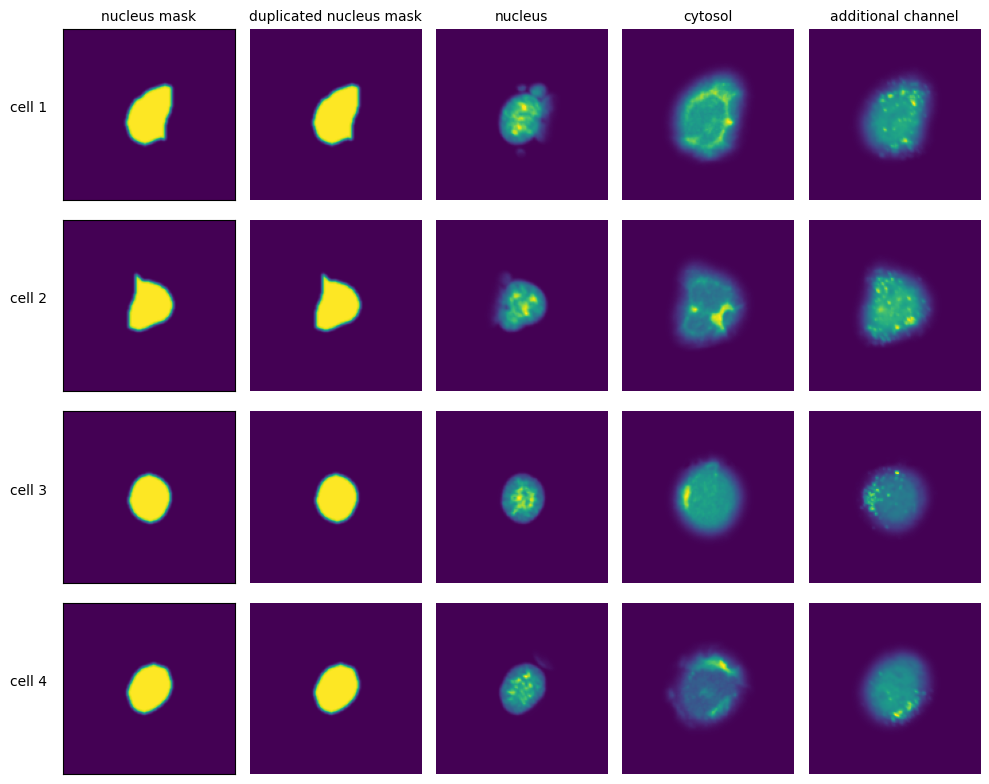

Extraction Results

[7]:

with h5py.File(f"{project_location}/extraction/data/single_cells.h5") as hf:

index = hf.get("single_cell_index")

_images = hf.get("single_cell_data")

n_cells = 4

fig, axs = plt.subplots(n_cells, 5, figsize = (5*2, n_cells*2))

labels = ["nucleus mask", "cytosol mask", "nucleus", "cytosol", "additional channel"]

for i in range(n_cells):

cell_id = index[i][1]

image = _images[i]

for n, _img in enumerate(image):

axs[i, n].imshow(_img)

if n == 0:

axs[i, n].set_ylabel(f"cell {cell_id}", fontsize = 10, rotation = 0, labelpad = 25)

axs[i, n].xaxis.set_visible(False)

axs[i, n].tick_params(left=False, labelleft=False)

else:

axs[i, n].axis("off")

if i == 0:

axs[i, n].set_title(labels[n], fontsize = 10)

fig.tight_layout()

DAPI Segmentation

This segmentation workflow aims to only segment the nuclei of cells.

[8]:

from sparcscore.pipeline.workflows import DAPISegmentation

project_location = f"{project_directory}/DAPISegmentation"

project = Project(os.path.abspath(project_location),

config_path= "../../../example_data/example_3/config_example3.yml",

overwrite=False,

debug=False,

segmentation_f=DAPISegmentation,

extraction_f=HDF5CellExtraction,

)

project.load_input_from_file(images)

modifying config

(3, 1079, 1079)

/Users/sophia/Documents/GitHub/SPARCSpy/src/sparcscore/pipeline/project.py:108: UserWarning: Theres already a directory in the location path

warnings.warn("Theres already a directory in the location path")

[9]:

project.segment()

project.extract()

WARNING:root:Folder /var/folders/35/p4c58_4n3bb0bxnzgns1t7kh0000gn/T/./temp_mmapci8bz75y with temp mmap arrays is being deleted.All existing temp mmapp arrays will be unusable!

WARNING:root:New temp folder location. Temp mmap arrays are written to /var/folders/35/p4c58_4n3bb0bxnzgns1t7kh0000gn/T/./temp_mmapfec6aoqr. Cleanup of this folder is OS dependant, and might need to be triggered manually!

extracting classes: 113it [00:00, 188.70it/s]

Segmentation Results

[10]:

with h5py.File(f"{project_location}/segmentation/segmentation.h5") as hf:

segmentation = hf.get("labels")

fig, axs = plt.subplots(1, 2, figsize = (10, 5));

axs[0].imshow(segmentation[0])

axs[0].axis("off")

axs[0].set_title("Nucleus Segmentation Mask")

axs[1].imshow(segmentation[1])

axs[1].axis("off")

axs[1].set_title("Cytosol Segmentation Mask")

fig.tight_layout()

Extraction Results

[11]:

with h5py.File(f"{project_location}/extraction/data/single_cells.h5") as hf:

index = hf.get("single_cell_index")

_images = hf.get("single_cell_data")

n_cells = 4

fig, axs = plt.subplots(n_cells, 5, figsize = (5*2, n_cells*2))

labels = ["nucleus mask", "duplicated nucleus mask", "nucleus", "cytosol", "additional channel"]

for i in range(n_cells):

cell_id = index[i][1]

image = _images[i]

for n, _img in enumerate(image):

axs[i, n].imshow(_img)

if n == 0:

axs[i, n].set_ylabel(f"cell {cell_id}", fontsize = 10, rotation = 0, labelpad = 25)

axs[i, n].xaxis.set_visible(False)

axs[i, n].tick_params(left=False, labelleft=False)

else:

axs[i, n].axis("off")

if i == 0:

axs[i, n].set_title(labels[n], fontsize = 10)

fig.tight_layout()

Cytosol Segmentation Cellpose

This method uses a deep learning based segmentation approach and should optimally be run using a GPU as it is otherwise very slow.

[12]:

from sparcscore.pipeline.workflows import CytosolSegmentationCellpose

project_location = f"{project_directory}/CytosolSegmentationCellpose"

project = Project(os.path.abspath(project_location),

config_path= "../../../example_data/example_3/config_example3.yml",

overwrite=False,

debug=False,

segmentation_f=CytosolSegmentationCellpose,

extraction_f=HDF5CellExtraction,

)

project.load_input_from_file(images)

modifying config

(3, 1079, 1079)

/Users/sophia/Documents/GitHub/SPARCSpy/src/sparcscore/pipeline/project.py:108: UserWarning: Theres already a directory in the location path

warnings.warn("Theres already a directory in the location path")

[13]:

project.segment()

project.extract()

WARNING:root:Folder /var/folders/35/p4c58_4n3bb0bxnzgns1t7kh0000gn/T/./temp_mmapfec6aoqr with temp mmap arrays is being deleted.All existing temp mmapp arrays will be unusable!

WARNING:root:New temp folder location. Temp mmap arrays are written to /var/folders/35/p4c58_4n3bb0bxnzgns1t7kh0000gn/T/./temp_mmap8rm6qs0m. Cleanup of this folder is OS dependant, and might need to be triggered manually!

/Users/sophia/Documents/GitHub/SPARCSpy/src/sparcscore/pipeline/extraction.py:408: RuntimeWarning: invalid value encountered in cast

px_centers = np.round(center_nuclei).astype(int)

WARNING:root:Folder /var/folders/35/p4c58_4n3bb0bxnzgns1t7kh0000gn/T/./temp_mmap8rm6qs0m with temp mmap arrays is being deleted.All existing temp mmapp arrays will be unusable!

WARNING:root:New temp folder location. Temp mmap arrays are written to /var/folders/35/p4c58_4n3bb0bxnzgns1t7kh0000gn/T/./temp_mmapvaorms53. Cleanup of this folder is OS dependant, and might need to be triggered manually!

extracting classes: 109it [00:00, 213.36it/s]

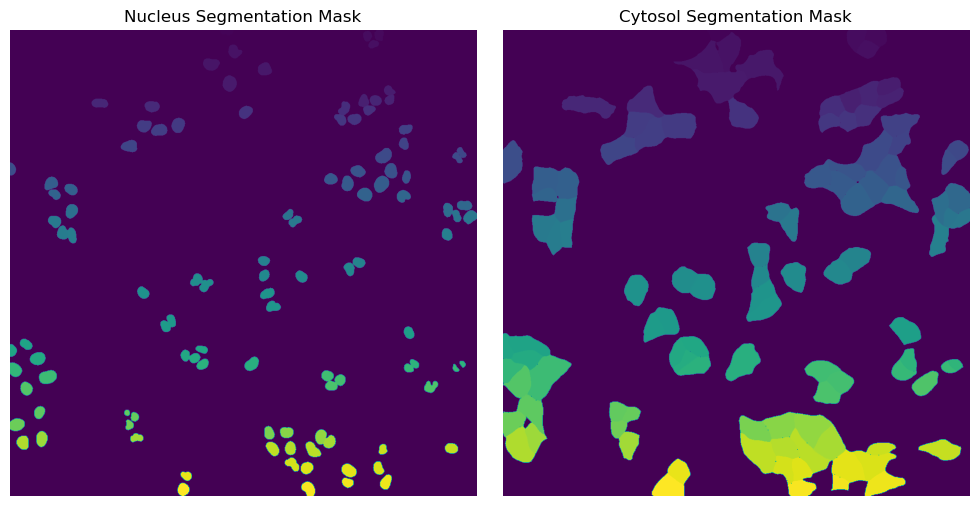

Segmentation Results

[14]:

with h5py.File(f"{project_location}/segmentation/segmentation.h5") as hf:

segmentation = hf.get("labels")

fig, axs = plt.subplots(1, 2, figsize = (10, 5));

axs[0].imshow(segmentation[0])

axs[0].axis("off")

axs[0].set_title("Nucleus Segmentation Mask")

axs[1].imshow(segmentation[1])

axs[1].axis("off")

axs[1].set_title("Cytosol Segmentation Mask")

fig.tight_layout()

Extraction Results

[15]:

with h5py.File(f"{project_location}/extraction/data/single_cells.h5") as hf:

index = hf.get("single_cell_index")

_images = hf.get("single_cell_data")

n_cells = 4

fig, axs = plt.subplots(n_cells, 5, figsize = (5*2, n_cells*2))

labels = ["nucleus mask", "cytosol mask", "nucleus", "cytosol", "additional channel"]

for i in range(n_cells):

cell_id = index[i][1]

image = _images[i]

for n, _img in enumerate(image):

axs[i, n].imshow(_img)

if n == 0:

axs[i, n].set_ylabel(f"cell {cell_id}", fontsize = 10, rotation = 0, labelpad = 25)

axs[i, n].xaxis.set_visible(False)

axs[i, n].tick_params(left=False, labelleft=False)

else:

axs[i, n].axis("off")

if i == 0:

axs[i, n].set_title(labels[n], fontsize = 10)

fig.tight_layout()

DAPI Segmentation Cellpose

This method uses a deep learning based segmentation approach and should optimally be run using a GPU as it is otherwise very slow.

[16]:

from sparcscore.pipeline.workflows import DAPISegmentationCellpose

project_location = f"{project_directory}/DAPISegmentationCellpose"

project = Project(os.path.abspath(project_location),

config_path= "../../../example_data/example_3/config_example3.yml",

overwrite=False,

debug=False,

segmentation_f=DAPISegmentationCellpose,

extraction_f=HDF5CellExtraction,

)

project.load_input_from_file(images)

modifying config

(3, 1079, 1079)

/Users/sophia/Documents/GitHub/SPARCSpy/src/sparcscore/pipeline/project.py:108: UserWarning: Theres already a directory in the location path

warnings.warn("Theres already a directory in the location path")

[17]:

project.segment()

project.extract()

WARNING:root:Folder /var/folders/35/p4c58_4n3bb0bxnzgns1t7kh0000gn/T/./temp_mmapvaorms53 with temp mmap arrays is being deleted.All existing temp mmapp arrays will be unusable!

WARNING:root:New temp folder location. Temp mmap arrays are written to /var/folders/35/p4c58_4n3bb0bxnzgns1t7kh0000gn/T/./temp_mmapzrcxbt8k. Cleanup of this folder is OS dependant, and might need to be triggered manually!

extracting classes: 128it [00:00, 189.94it/s]

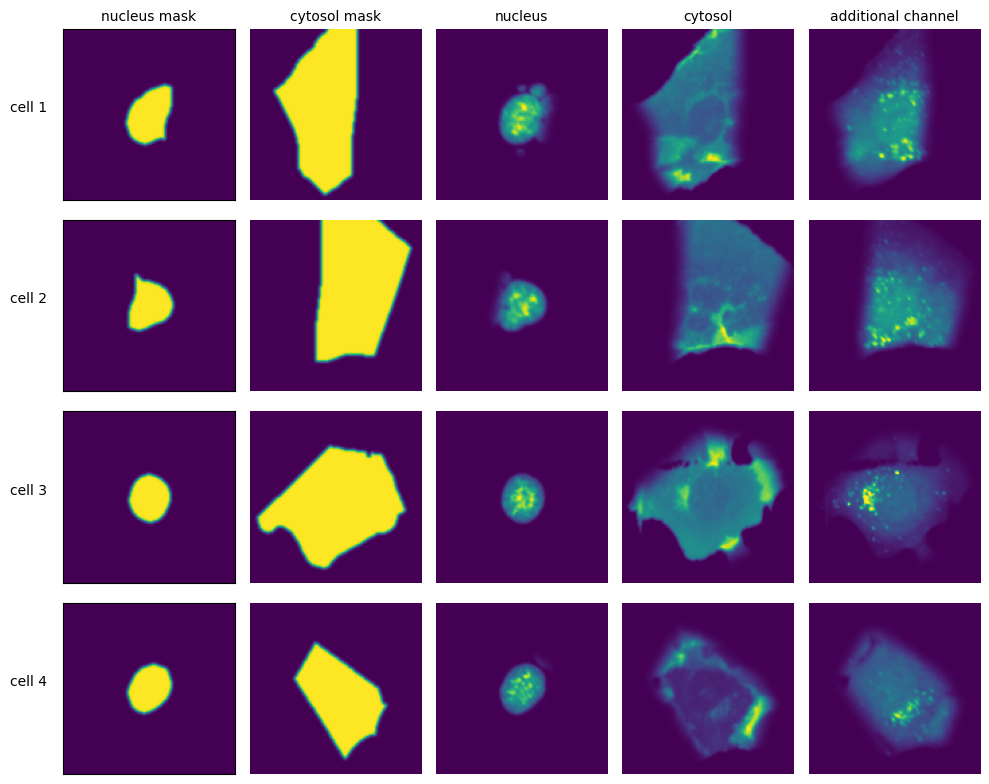

Segmentation Results

[18]:

with h5py.File(f"{project_location}/segmentation/segmentation.h5") as hf:

segmentation = hf.get("labels")

fig, axs = plt.subplots(1, 2, figsize = (10, 5));

axs[0].imshow(segmentation[0])

axs[0].axis("off")

axs[0].set_title("Nucleus Segmentation Mask")

axs[1].imshow(segmentation[1])

axs[1].axis("off")

axs[1].set_title("Cytosol Segmentation Mask")

fig.tight_layout()

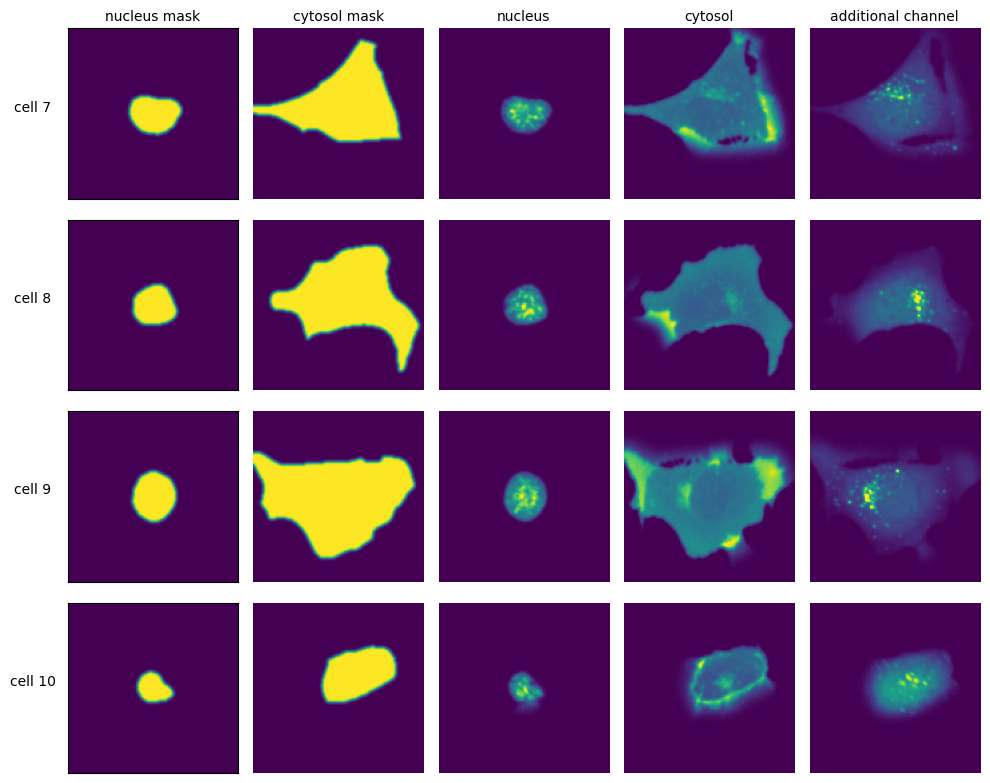

Extraction Results

[19]:

with h5py.File(f"{project_location}/extraction/data/single_cells.h5") as hf:

index = hf.get("single_cell_index")

images = hf.get("single_cell_data")

n_cells = 4

fig, axs = plt.subplots(n_cells, 5, figsize = (5*2, n_cells*2))

labels = ["nucleus mask", "cytosol mask", "nucleus", "cytosol", "additional channel"]

for i in range(n_cells):

cell_id = index[i][1]

image = images[i]

for n, _img in enumerate(image):

axs[i, n].imshow(_img)

if n == 0:

axs[i, n].set_ylabel(f"cell {cell_id}", fontsize = 10, rotation = 0, labelpad = 25)

axs[i, n].xaxis.set_visible(False)

axs[i, n].tick_params(left=False, labelleft=False)

else:

axs[i, n].axis("off")

if i == 0:

axs[i, n].set_title(labels[n], fontsize = 10)

fig.tight_layout()